How Deepfakes Exploit KYC Verification Systems

Understanding the risks of AI-generated identities and how they undermine digital security measures

Onlyfake Team

Document generation experts passionate about the intersection of AI and automation. We help businesses streamline their document workflows with cutting-edge technology.

As financial platforms and digital services rely more heavily on Know Your Customer (KYC) protocols to verify user identities, a new threat has emerged: deepfakes. These AI-generated forgeries are used to fool verification systems by simulating realistic faces, voices, and even full identity documents. This article explores how deepfakes are infiltrating KYC workflows, the underground networks driving the trend, and how AI can also be used to prevent such fraud.

Deepfakes and the Rise of Synthetic Identities

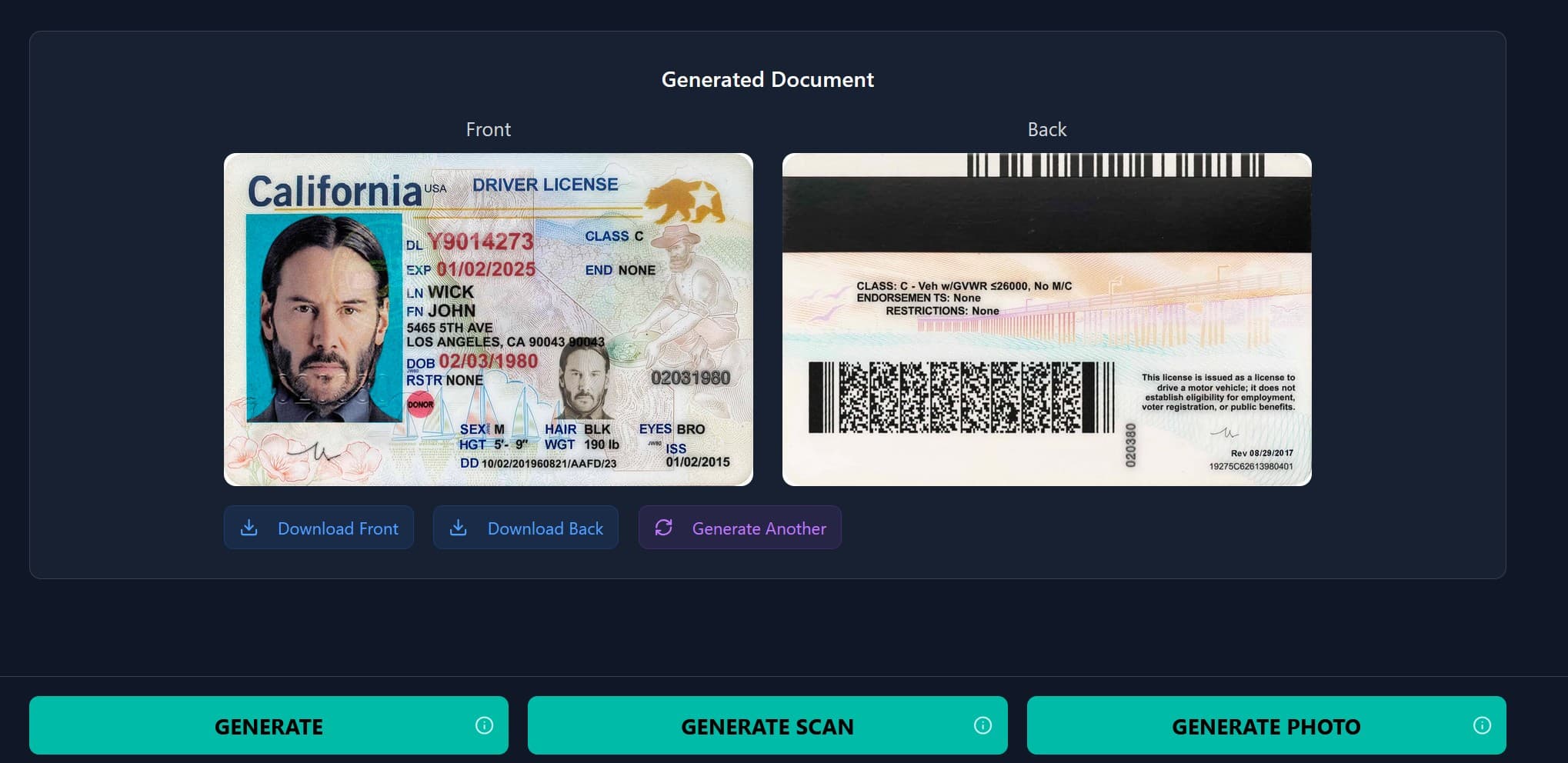

Deepfakes are hyper-realistic media produced with neural networks and generative AI. In the context of identity verification, these tools are combined with passport templates, fake documents, and stolen personal data to create convincing synthetic identities. These identities are then used to bypass KYC checks during onboarding at financial services, crypto platforms, and digital wallets.

The Anatomy of a Deepfake Fraud Operation

Fraudsters often source their materials from Telegram channels and the dark web, where full KYC kits are sold. These kits may include AI-generated selfies, deepfake videos, and matching ID cards or driver's licenses. Such services are often marketed by pseudonymous vendors like "John Wic," who are notorious for providing tools that help users create fake identities designed to slip past identity verification systems.

What Makes KYC Systems Vulnerable?

Traditional KYC systems were built to detect human error and manual forgery, not AI-generated media. Most rely on static document analysis, image comparison, and basic liveness checks. Deepfakes can now mimic natural movements such as blinking or smiling, and they can be paired with fraudulent documents that pass visual inspection.

Real-World Exposure and Media Investigations

Investigative outlets like 404 Media have exposed marketplaces where full identity packages are sold to individuals seeking anonymity for criminal or gray-market activities. These kits include not only fake identification but also step-by-step instructions for passing KYC tests on platforms with known vulnerabilities.

Countermeasures: AI-Powered Fraud Detection

Countering AI-generated fraud effectively requires smarter AI on the defensive side. Emerging fraud-prevention tools incorporate behavioral analytics, 3D face mapping, randomized gesture testing, and cross-referencing of document metadata. Some services also flag behavioral inconsistencies, such as typing speed or a device fingerprint, that do not match known legitimate user patterns.
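To make randomized gesture testing more concrete, here is a minimal Python sketch of how a verification service might issue and check an unpredictable gesture challenge. It is an illustration under stated assumptions, not a description of any particular vendor's system: the gesture names, the `Challenge` class, and the `issue_challenge` and `verify_response` functions are all hypothetical, and the step that recognizes gestures from video is assumed to exist elsewhere.

```python
import secrets
import time
from dataclasses import dataclass

# Hypothetical gesture vocabulary; a real system would use whatever
# gestures its face-tracking model can reliably recognize.
GESTURES = ("turn_left", "turn_right", "look_up", "blink_twice", "smile", "nod")


@dataclass
class Challenge:
    nonce: str          # ties a response to this specific challenge
    gestures: tuple     # ordered gestures the user must perform
    expires_at: float   # Unix timestamp after which the challenge is void
    used: bool = False  # set once any live attempt has been made


def issue_challenge(length=3, ttl_seconds=30.0, now=None):
    """Pick an unpredictable, non-repeating gesture sequence with a short lifetime."""
    now = time.time() if now is None else now
    rng = secrets.SystemRandom()  # OS-backed randomness, not a seeded PRNG
    gestures = tuple(rng.sample(GESTURES, length))
    return Challenge(secrets.token_hex(16), gestures, now + ttl_seconds)


def verify_response(challenge, nonce, observed, now=None):
    """Accept only a fresh, single-use response that matches the sequence exactly."""
    now = time.time() if now is None else now
    if challenge.used or now > challenge.expires_at:
        return False
    challenge.used = True  # burn the challenge even if this attempt fails
    if not secrets.compare_digest(nonce, challenge.nonce):
        return False
    # `observed` is assumed to come from a separate gesture-recognition step.
    return tuple(observed) == challenge.gestures
```

The design choice here is that the sequence is drawn at request time, expires quickly, and can be attempted only once, so a pre-rendered clip of a generic blink or smile is unlikely to match. This does not stop real-time face-swapping on its own, which is why it is normally combined with the other signals listed above.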

The Ethics of Document Generation Technology

It's important to note that tools like passport templates and ID mockups are not inherently malicious. Designers, educators, and developers often use them for demos and training. However, when these resources are used to generate fake identities or facilitate document fraud, they become a serious security concern. Regulation, platform oversight, and digital watermarking can help limit misuse.

Conclusion: The Future of KYC Needs to Adapt

As deepfake technology evolves, so too must the systems designed to protect digital identities. Financial institutions and tech platforms must recognize that legacy KYC tools are no longer sufficient. By integrating AI-powered detection methods, increasing cross-platform cooperation, and proactively identifying emerging threats, they can preserve the integrity of identity verification systems.

FAQ

What are deepfakes?

Deepfakes are AI-generated videos, images, or audio that mimic real people, often used for impersonation or disinformation.

How do deepfakes exploit KYC systems?

They are used alongside fake documents to mimic real users, fooling KYC systems into verifying synthetic or stolen identities.

What is a synthetic identity?

A synthetic identity is a fabricated identity made using a mix of real and fake information, commonly used in fraud.

Can AI help detect fake identities?

Yes. Advanced fraud detection tools can analyze micro-expressions, facial movements, and document metadata to spot fakes.

Where are fake IDs and deepfakes sold?

They are often sold through dark web markets or messaging platforms like Telegram, by individuals posing as identity specialists.